The last time I wrote about the network-attached storage (NAS) appliance that the good folks at Synology had sent my way, I spent a lot of time talking about how amazed I was at all the things that NAS appliances could do these days. They truly have come a very long way in the last decade or so.

Once I got done gushing about the DiskStation DS220+ that I had sitting next to my primary work area, I realized that I should probably do a post about it that amounted to more than a “fanboy rant.”

This is an attempt at “that post” and contains some relevant specifics on the DS220+’s capabilities as well as some summary words about my roughly five or six months of use.

First Up: Business

As the title of this post alluded to, I’ve found uses for the NAS that would be considered “work/business,” others that would be considered “play/entertainment,” and some that sit in-between. I’m going to start by outlining the way I’ve been using it in my work … or more accurately, “for non-play purposes.”

But first: one thing I found amazing about the NAS, even though it really isn’t a new concept, is that Synology maintains an application site (they call it the “Package Center”) that is available directly from within the NAS web interface.

Much like the application marketplaces that have become commonplace for mobile phones, or the Microsoft Store which is available by default to Windows 10 installations, the Package Center makes it drop-dead-simple to add applications and capabilities to a Synology NAS appliance. The first time I perused the contents of the Package Center, I kind of felt like a kid in a candy store.

With all the available applications, I had a hard time staying focused on the primary package I wanted to evaluate: Active Backup for Microsoft 365.

Backup and restore, as well as Disaster Recovery (DR) in general, are concepts I have some history and experience with. What I don’t have a ton of experience with is the way that companies are handling their DR and BCP (business continuity planning) for cloud-centric services themselves.

What little experience I do have generally leads me to categorize people into two different camps:

- Those who rely upon their cloud service provider for DR. As a generalization, there are plenty of folks that rely upon their cloud service provider for DR and data protection. Sometimes folks in this group wholeheartedly believe, right or wrong, that their cloud service’s DR protection and support are robust. Oftentimes, though, the choice is simply made by default, without solid information, or simply because building one’s own DR plan and implementing it is not an inexpensive endeavor. Whatever the reason(s), folks in this group are attached at the hip to whatever their cloud service provider has for DR and BCP – for better or for worse.

- Those who don’t trust the cloud for DR. There are numerous reasons why someone may choose to augment a cloud service provider’s DR approach with something supplemental. Maybe they simply don’t trust their provider. Perhaps the provider has a solid DR approach, but the RTO and RPO values quoted by the provider don’t line up with the customer’s specific requirements. It may also be that the customer simply doesn’t want to put all of their DR eggs in one basket and wants options they control.
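To make the RTO/RPO mismatch concrete, here is a minimal sketch of the comparison someone in the second camp is implicitly making. The class name, function name, and all of the numbers are hypothetical and chosen purely for illustration; they are not figures quoted by any provider.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import timedelta


@dataclass(frozen=True)
class RecoveryObjectives:
    """RPO = maximum tolerable data loss; RTO = maximum tolerable downtime."""
    rpo: timedelta
    rto: timedelta


def unmet_objectives(required: RecoveryObjectives, offered: RecoveryObjectives) -> list[str]:
    """Return the names of the objectives that the provider's offer fails to meet."""
    gaps = []
    if offered.rpo > required.rpo:
        gaps.append("RPO")
    if offered.rto > required.rto:
        gaps.append("RTO")
    return gaps


# Hypothetical numbers: the business can lose at most 4 hours of data
# and tolerate at most 8 hours of downtime.
required = RecoveryObjectives(rpo=timedelta(hours=4), rto=timedelta(hours=8))
# Hypothetical provider offer: daily recovery points, 6-hour recovery time.
offered = RecoveryObjectives(rpo=timedelta(hours=24), rto=timedelta(hours=6))

print(unmet_objectives(required, offered))
```

Any objective that shows up in the returned list is a gap that supplemental protection would need to close.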

Microsoft 365 Data Protection

On the specific topic of Microsoft 365 data protection, I tend to sit solidly in the middle of the two extremes I just described. I know that Microsoft takes steps to protect 365 data, but good luck finding a complete description or metrics around the measures they take. If I had to recover some data, I’m relatively (but not entirely) confident I could open a service ticket, make the request, and eventually get the data back in some form.

The problem with this approach is that it’s filled with assumptions and not a lot of objective data. I suspect part of the reason for this is that actual protection windows and numbers are always evolving, but I just don’t know.

You can’t throw a stick on the internet and not hit a seemingly endless supply of vendors offering to fill the hole that exists with Microsoft 365 data protection. These tools are designed to afford customers a degree of control over their data protection. And as someone who has talked about DR and BCP for many years now, redundancy of data protection is never a bad thing.

Introducing the NAS Solution

And that brings me back to Synology’s Active Backup for Microsoft 365 package.

In all honesty, I wasn’t actually looking for supplemental Microsoft 365 data protection at the time. Knowing the price tag on some of the services and packages that are sold to address protection needs, I couldn’t justify (as a “home user”) the cost.

I was pleasantly surprised to learn that the Synology solution/package was “free” – or rather, if you owned one of Synology’s NAS devices, you had free access to download and use the package on your NAS.

The price was right, so I decided to install the package on my DS220+ and take it for a spin.

Kicking The Tires

First impressions and initial experiences mean a lot to me. For the brief period of time when I was a product manager, I knew that a bad first experience could shape someone’s entire view of a product.

I am therefore very happy to say that the Synology backup application was a breeze to get set up – something I initially felt might not be the case. My initial hesitancy stemmed from the fact that applications and products that work with Microsoft 365 need to be registered as trusted applications within the M365 tenant they’re targeting. Most of the products I’ve worked with that need to be set up in this capacity involve a fair amount of manual legwork: certificate preparation, finding and granting permissions within a created app registration, etc.

Not Synology’s backup package. From the moment you press the “Create” button and indicate that you want to establish a new backup of Microsoft 365 data, you’re provided with solid guidance and hand-holding throughout the entire setup and app registration process. Of all of the apps I’ve registered in Azure, Synology’s process and approach has been the best – hands-down. It took no more than five minutes to establish a recurring backup against a tenant of mine.

I’ve included a series of screenshots (below) that walk through the backup setup process.

What Goes In, SHOULD Come Out ...

When I would regularly speak on data protection and DR topics, I had a saying that I would frequently share: “Backup is science, but Restore is an art.” A decade or more ago, those tasked with backing up server-resident data often took a “set it and forget it” approach to data backups. And when it came time to restore some piece of data from those backups, many of the folks who took such an approach would discover (to their horror) that their backups had been silently failing for weeks or months.

Moral of the story (and a 100-level lesson in DR): If you establish backups, you need to practice your restore operations until you’re convinced they will work when you need them.
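One way to practice is to restore a folder to a scratch location and compare it against the original. The sketch below is a generic illustration, not a feature of the Synology package: the function names are my own, and it assumes you have both the source folder and the restored copy available on a local or mounted file system.

```python
from __future__ import annotations

import hashlib
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so that large files do not need to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_restore(source_dir: Path, restored_dir: Path) -> list[str]:
    """Return a list of files that are missing from, or differ in, the restored copy."""
    problems = []
    for source_file in sorted(p for p in source_dir.rglob("*") if p.is_file()):
        relative = source_file.relative_to(source_dir)
        restored_file = restored_dir / relative
        if not restored_file.is_file():
            problems.append(f"missing: {relative.as_posix()}")
        elif sha256_of(source_file) != sha256_of(restored_file):
            problems.append(f"differs: {relative.as_posix()}")
    return problems
```

An empty list means every source file came back byte-for-byte. Files that changed after the backup ran will legitimately show up as differences, so a check like this works best against data that has been left alone since the backup.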

Synology approaches restoration in a very straightforward fashion that works very well (at least in my use case). There is a separate web portal from which restores and exports (from backup sets) are conducted.

And in case you’re wondering: yes, this means that you can grant some or all of your organization (or your family, if you’re like me) self-service restore capabilities. Backup and restore are handled separately from one another.

As the series of screenshots below illustrates, there is a slightly different restore presentation for each of the five areas backed up by the Synology package: (OneDrive) Files, Email, SharePoint Sites, Contacts, and Calendars. Restores can be performed from any backup set and offer the ability to select the specific files/items to recover. The ability to do an in-place restore or an export (which is downloaded by the browser) is also available for all items being recovered. Pretty handy.

Will It Work For You?

I’ve got to fall back to the SharePoint consultant’s standard answer: it depends.

I see something like this working exceptionally well for small-to-mid-sized organizations that have smaller budgets and already overburdened IT staff. Setting up automated backups is a snap, and enabling users to get their data back without a service ticket and/or IT becoming the bottleneck is a tremendous load off of support personnel.

My crystal ball stops working when we’re talking about larger companies and enterprise scale. All sorts of other factors come into play with organizations in this category. A NAS, regardless of capabilities, is still “just” a NAS at the end of the day.

My DS220+ has two 2TB drives in it. I/O to the device is snappy, but I’m only one user. Enterprise-scale performance isn’t something I’m really equipped to evaluate.

Then there are the questions of identity and Active Directory implementation. I’ve got a very basic AD implementation here at my house, but larger organizations typically have alternate identity stores, enforced group policy objects (GPOs), and all sorts of other complexities that tend to produce a lot of “what if” questions.

Larger organizations are also typically interested in advanced features, like integration with existing enterprise backup systems, different backup modes (differential/incremental/etc.), deduplication, and other similar optimizations. The Synology package, while complete in terms of its general feature set, doesn’t necessarily possess all the levers, dials, and knobs an enterprise might want or need.

So, I happily stand by my “solid for small-to-mid-sized companies” outlook … and I’ll leave it there. For no additional cost, Synology’s Active Backup for Microsoft 365 is a great value in my book, and I’ve implemented it for three tenants under my control.

Rounding Things Out: Entertainment

I did mention some “play” along with the work in this post’s title – not something that everyone thinks about when envisioning a network storage appliance. Or rather, I should say that it’s not something I had considered very much.

My conversations with the Synology folks and trips through the Package Center convinced me that there were quite a few different ways to have fun with a NAS. There are two packages I installed on my NAS to enable a little fun.

Package Number One: Plex Server

Admittedly, this is one capability I knew existed prior to getting my DS220+. I’ve been an avid Plex user and advocate for quite a few years now. When I first got on the Plex train in 2013, it represented more potential than actual product.

Nowadays (after years of maturity and expanding use), Plex is a solid media server for hosting movies, music, TV, and other media. It has become our family’s digital video recorder (DVR), our Friday night movie host, and a great way to share media with friends.

I’ve hosted a Plex Server (self-hosted virtual machine) for years, and I have several friends who have done the same. At least a few of my friends are hosting from NAS devices, so I’ve always had some interest in seeing how Plex would perform on a NAS device versus my VM.

As with everything else I’ve tried with my DS220+, it’s a piece of cake to actually get a Plex Server up-and-running. Install the Plex package, and the NAS largely takes care of the rest. The server is accessible through a browser, Plex client, or directly from the NAS web console.

I’ve tested a bit, but I haven’t decommissioned the virtual machine (VM) that is my primary Plex Server – and I probably won’t. A lot of people connect to my Plex Server, and that server has had multiple transcodes going while serving up movies to multiple concurrent users – tasks that are CPU, I/O, and memory intensive. So while the NAS does a decent job in my limited testing here at the house, I don’t have data that convinces me that I’d continue to see acceptable performance with everyone accessing it at once.

One thing that’s worth mentioning: if you’re familiar with Plex, you know that they have a pretty aggressive release schedule. I’ve seen new releases drop on a weekly basis at times, so it feels like I’m always updating my Plex VM.

What about the NAS package and updates? Well, the NAS is just as easy to update. Updated packages don’t appear in the Package Center with the same frequency as the new Plex Server releases, and you won’t get the same one-click server update support (a feature that never worked for me since I run Plex Server non-interactively in a VM), but you do get a link to download a new package from the NAS’s update notification:

The “Download Now” button initiates the download of an .SPK file – a Synology/NAS package file. The package file then needs to be uploaded from within the Package Center using the “Manual Install” button:

And that’s it! As with most other NAS tasks, I would be hard-pressed to make the update process any easier.

Package Number Two: Docker

If you read the first post I wrote back in February as a result of getting the DS220+, you might recall me mentioning Docker as another of the packages I was really looking forward to taking for a spin.

The concept of containerized applications has been around for a while now, and it represents an attractive way to establish application functionality without an administrator or installer needing to understand all of the ins and outs of a particular application stack, its prerequisites and dependencies, etc. All that’s needed is a container image and host.

So, to put it another way: there are literally millions of Docker container images available that you could download and get running in Docker with very little time invested on your part to make a service or application available. No knowledge of how to install, configure, or set up the application or service is required on your part.

Let's Go Digging

One container I had my eye on from the get-go was itzg’s Minecraft Server container. itzg is the online handle used by a gentleman named Geoff Bourne from Texas, and he has done all of the work of preparing a Minecraft server container that is as close to plug-and-play as containers come.

Minecraft (for those of you without children) is an immensely popular game available on many platforms and beloved by kids and parents everywhere. Minecraft has a very deep crafting system and focuses on building and construction rather than on “blowing things up” (although you can do that if you truly want to) as so many other games do.

My kids and I have played Minecraft together for years, and in that time I’ve run various Minecraft servers that friends have joined us on. It isn’t terribly difficult to establish and expose a Minecraft server, but it does take a little time – if you do it “manually.”

I decided to take Docker for a run with itzg’s Minecraft server container, and we were up-and-running in no time. The NAS Docker package has a wonderful web-based interface, so there’s no need to drop down to a command line – something I appreciate (hey, I love my GUIs). You can easily make configuration changes (like swapping the TCP port that responds to game requests), move an existing game’s files onto/off of the NAS, and more.
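I did all of this through the GUI, but for those who prefer a command line, the same settings map onto a plain `docker run` invocation. The sketch below only builds and prints that command; it does not run Docker. The function name, host port, and NAS folder path are hypothetical placeholders, while the container-side port (25565), the `/data` volume, and the `EULA=TRUE` variable reflect my understanding of how the itzg/minecraft-server image is documented, so double-check them against the image’s own instructions.

```python
from __future__ import annotations

import shlex


def minecraft_run_command(host_port: int, data_dir: str, name: str = "minecraft") -> list[str]:
    """Build the argument list for running the itzg/minecraft-server image."""
    return [
        "docker", "run", "-d",
        "--name", name,
        # Map a host port of your choosing to the server's default game port.
        "-p", f"{host_port}:25565",
        # Keep the world files in a host folder so they can be moved on or off the NAS.
        "-v", f"{data_dir}:/data",
        # The image expects the Minecraft EULA to be accepted explicitly.
        "-e", "EULA=TRUE",
        "itzg/minecraft-server",
    ]


# Hypothetical values: pick your own port and shared-folder path.
command = minecraft_run_command(host_port=25570, data_dir="/volume1/docker/minecraft")
print(shlex.join(command))
```

Keeping the command as a list of arguments (rather than one long string) makes it easy to hand to `subprocess.run` later without worrying about quoting.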

I actually decided to move our active Minecraft “world” (in the form of the server data files) onto the NAS, and we ran the game from the NAS for about two months. Although we had some unexpected server stops, the NAS performed admirably with multiple players concurrently. I suspect the server stops were actually updates of some form taking place rather than a problem of some sort.

The NAS-based Docker server performed admirably for everything except Elytra flight. In all fairness, though, I haven’t been on a server of any kind yet where Elytra flight works in a way I’d describe as “well” largely because of the I/O demands associated with loading/unloading sections of the world while flying around.

Conclusion

After a number of months of running with a Synology NAS on my network, I can’t help but say again that I am seriously impressed by what it can do and how it simplifies a number of tasks.

I began the process of server consolidation years ago, and I’ve been trying to move some tasks and operations out to the cloud as it becomes feasible to do so. Where I once wouldn’t have given a second thought to adding another Windows server to my infrastructure, I’m now looking at things differently. If a NAS can do something more easily (which has been the case for the majority of what I’ve tried), I see myself trying it there first.

I once had an abundance of free time on my hands. But that was 20 – 30 years ago. Nowadays, I’m in the business of simplifying and streamlining as much as I can. And I can’t think of a simpler approach for many infrastructure tasks and needs than using a NAS.

References and Resources

- Blog Post: The Gift of NAS

- NAS Company: Synology

- NAS Appliance: DiskStation DS220+

- Synology: Add-on Packages

- Synology: Active Backup for Microsoft 365

- Blog Page: DR Guide 2010

- Blog Post: RPO and RTO: Prerequisites for Informed SharePoint Disaster Recovery Planning

- Search: Microsoft 365 Data Protection

- Synology: How do I register an Azure AD application for Active Backup for Microsoft 365?

- Site: Plex

- Site: Docker

- Docker: What is a Container?

- Docker Hub: Container Images

- Docker Hub: itzg/minecraft-server

- GitHub: Geoff Bourne

- Game: Minecraft

- Minecraft Wiki: Crafting

- Minecraft Wiki: Elytra

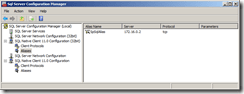

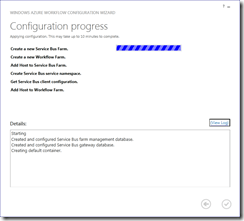

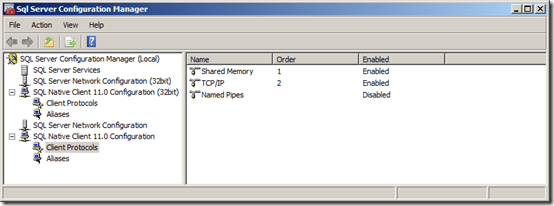

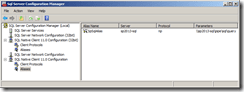

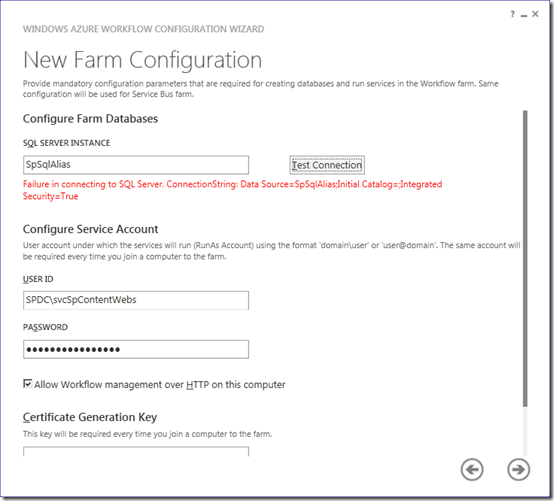

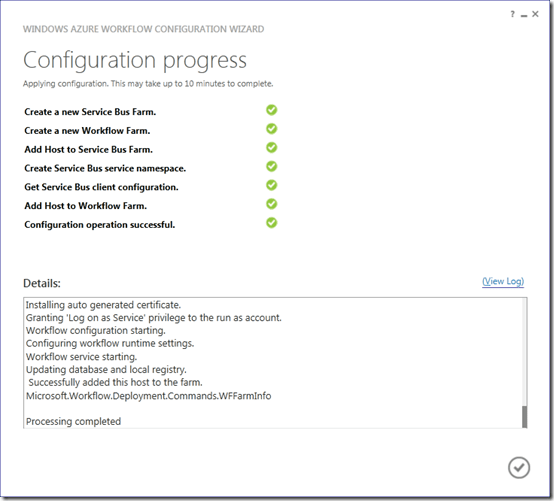
