I’ve been meaning to do a small write-up on a couple of key Object Cache points, but other things kept trumping my desire to put this post together. I finally found the nudge I needed (or rather, gave myself a kick in the butt) after discussing the topic a bit with Andrew Connell following a presentation he gave at a SharePoint Users of Indiana user group meeting. Thanks, Andrew!

A Brief Bit of Background

As I may have mentioned in a previous post, I’ve spent the bulk of the last two years buried in a set of Internet-facing MOSS publishing sites that are the public presence for my current client. Given that my current client is a Fortune 50 company, it probably comes as no surprise when I say that the sites see quite a bit of daily traffic. Issues due to poor performance tuning and inefficient code have a way of making themselves known in dramatic fashion.

Some time ago, we were experiencing a whole host of critical performance issues that ultimately stemmed from a variety of sources: custom code, infrastructure configuration, cache tuning parameters, and more. It took a team of Microsoft experts, along with professionals working for the client, to systematically address each item and bring operations back to a “normal” state. Though we ultimately worked through a number of different problem areas, one area in particular stood out: the MOSS Object Cache and how it was “tuned.”

What is the MOSS Object Cache?

The MOSS Object Cache is memory that’s allocated on a per-site collection basis to store commonly accessed objects, such as navigational data, query results (cross-list and cross-site), site properties, page layouts, and more. Object Caching should not be confused with Page Output Caching (which is an extension of ASP.NET’s built-in page caching capability) or BLOB Caching/Disk-Based Caching (which uses the local server’s file system to store images, CSS, JavaScript, and other resource-type objects).

Publishing sites make use of the Object Cache without any intervention on the part of administrators. By default, when a publishing site collection is created, its Object Cache is allowed to use up to 100MB of memory. This allocation can be seen on the Object Cache Settings site collection administration page within a publishing site:

Note that I said that up to 100MB can be used by the Object Cache by default. The size of the allocation simply determines how large the cache can grow in memory before item ejection, flushing, and possible compactions result. The maximum cache size isn’t a static allocation, so allocating 500MB of memory, for example, won’t deprive the server of 500MB of memory unless the amount of data going into the cache grows to that level. I’m taking a moment to point this out because I wasn’t (personally) aware of this when I first started working with the Object Cache. It also becomes relevant in a story I’ll be telling in a bit.
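To make the "ceiling, not reservation" distinction concrete, here is a toy Python model of a size-capped cache. It is not how MOSS implements the Object Cache: the class name, its least-recently-used ejection policy, and the item sizes are all hypothetical. It only shows that memory use tracks the data actually stored, and that ejections begin only once the configured limit is reached.

```python
from collections import OrderedDict


class ToyBoundedCache:
    """Hypothetical size-capped cache; a teaching model, not MOSS internals."""

    def __init__(self, limit_mb: float) -> None:
        self.limit_mb = limit_mb   # a ceiling, not a reservation
        self.used_mb = 0.0         # grows only as data is actually stored
        self.ejections = 0
        self._items: "OrderedDict[str, float]" = OrderedDict()

    def put(self, key: str, size_mb: float) -> None:
        if key in self._items:
            self.used_mb -= self._items.pop(key)
        # Eject least recently used items only once the ceiling would be exceeded.
        while self._items and self.used_mb + size_mb > self.limit_mb:
            _, evicted_mb = self._items.popitem(last=False)
            self.used_mb -= evicted_mb
            self.ejections += 1
        self._items[key] = size_mb
        self.used_mb += size_mb

    def get(self, key: str) -> bool:
        if key in self._items:
            self._items.move_to_end(key)  # mark as most recently used
            return True
        return False


roomy = ToyBoundedCache(limit_mb=500)
tight = ToyBoundedCache(limit_mb=10)
for i in range(20):
    roomy.put(f"nav-{i}", 1.5)
    tight.put(f"nav-{i}", 1.5)

print(roomy.used_mb, roomy.ejections)  # 30.0 0
print(tight.used_mb, tight.ejections)  # 9.0 14
```

Both caches receive the same 20 hypothetical items: the 500MB cache holds only the 30MB it was given and ejects nothing, while the 10MB cache ejects on every insert after the sixth.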

Microsoft’s TechNet site has an article that provides pretty good coverage of caching within MOSS (including the Object Cache), so I’m not going to go into all of the details it covers in this post. I will make the assumption that the information presented in the TechNet article has been read and understood, though, because it serves as the starting point for my discussion.

Object Cache Memory Tuning Basics

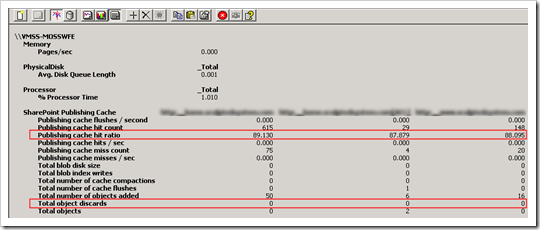

The TechNet article indicates that two specific indicators should be watched for tuning purposes. Those two indicators, along with their associated performance counters, are:

- Cache hit ratio (SharePoint Publishing Cache/Publishing cache hit ratio)

- Object discard rate (SharePoint Publishing Cache/Total object discards)

The image below shows these counters highlighted on a MOSS WFE where all SharePoint Publishing Cache counters have been added to a Performance Monitor session:

According to the article, the Publishing cache hit ratio should remain above 90% and a low object discard rate should be observed. This is good advice, and I’m not saying that it shouldn’t be followed. In fact, my experience has shown Publishing cache hit ratio values of 98%+ are relatively common for well-tuned publishing sites possessing largely static content.

The “Dirty Little Secret” about the Publishing Cache Hit Ratio Counter

As it turns out, though, the Publishing cache hit ratio counter should come with a very large warning that reads as follows:

WARNING: This counter only resets with a server reboot. Data it displays has been aggregating for as long as the server has been up.

This may not seem like such a big deal, particularly if you’re looking at a new site collection. Let me share a painful personal experience, though, that should drive home how important a point this really is.

I was doing a little Object Cache tuning for a client to help free up some memory and make application pool recycles cleaner, and I wanted to see whether I could adjust the Object Cache allocations for multiple (about 18) site collections downward. We were getting into a memory-constrained position, and a review of the Publishing cache hit ratio values for the existing site collections showed that all sites were turning in 99%+ cache hit ratios. Operating under the (previously described) mistaken assumption that Object Cache memory was statically allocated, I figured that I might be able to save a lot of memory simply by adjusting the memory allocations downward.

With that mistaken understanding in mind, I went about modifying the Object Cache allocation for one of the site collections. I knew that we had some data going into the cache (navigational data and a few cross-list query result sets), but I figured that we couldn’t have been using a whole lot of memory. I adjusted the allocation down dramatically (to 10MB) on the site collection, and I periodically checked back over the course of several hours to see how the Publishing cache hit ratio fared.

After a chunk of the day had passed, I saw that the Publishing cache hit ratio remained at 99%+. I considered my assumption and understanding about data going into the Object Cache to be validated, and I went on my way. What I didn’t realize at the time was that the actual Publishing cache hit ratio counter value was driven by the following formula:

Publishing cache hit ratio = total cache hits / (total cache hits + total cache misses) * 100%

Note the pervasive use of the word “total” in the formula. In my defense, it wasn’t until we engaged Microsoft and made requests (which resulted in many more internal requests) that we learned the formulas that generate the numbers seen in many of the performance counters. To put it mildly, the experience was “eye opening.”

In reality, the site collection was far from okay following the tuning I performed. It truly needed significantly more than the 10MB allocation I had given it. If it were possible to reset the Publishing cache hit ratio counter or at least provide a short-term snapshot/view of what was going on, I would have observed a significant drop following the change I made. Since our server had been up for a month or more, and had been doing a good job of servicing requests from the cache during that time, the sudden drop in objects being served out of the Object Cache was all but undetectable in the short-term using the Publishing cache hit ratio.

To spell this out even further for those who don’t want to do the math: a highly trafficked publishing site like one of my client’s sites may service 50 million requests from the Object Cache over the course of a month. Assuming that the site collection had been up for a month with a 99% Object Cache hit ratio, plugging the numbers into the aforementioned formula might look something like this:

Publishing cache hit ratio = 49500000 / (49500000 + 500000) * 100% = 99.0%
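The formula is easy to check in code. In this minimal Python sketch the function name is my own (hypothetical), and the inputs are the month-long totals from the example above.

```python
def publishing_cache_hit_ratio(total_hits: int, total_misses: int) -> float:
    """Percent of all requests since the last reboot that were cache hits."""
    total = total_hits + total_misses
    return 100.0 * total_hits / total if total else 0.0


# A month of uptime: 49.5 million hits and 500,000 misses.
month_ratio = publishing_cache_hit_ratio(49_500_000, 500_000)
print(f"{month_ratio:.1f}%")  # 99.0%
```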

50 million Object Cache requests per month breaks down to about 1.7 million requests per day. Let’s say that my Object Cache adjustment resulted in an extremely pathetic 10% cache hit ratio. That means that of 1.7 million object requests, only 170000 of them would have been served from the Object Cache itself. Even if I had watched the Publishing cache hit ratio counter for the entire day and seen the results of all 1.7 million requests, here’s what the ratio would have looked like at the end of the day (assuming one month of uptime):

Publishing cache hit ratio = 49670000 / (49670000 + 2030000) * 100% = 96.1%

Net drop: only about 2.9 percentage points over the course of the entire day!
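Here is the same one-day scenario worked in Python. Beyond the numbers in the text, the only thing to get right is the bookkeeping: the day’s 170,000 hits are added to the running hit total and its 1,530,000 misses to the running miss total, because the counter keeps aggregating both until the next reboot. The function name is hypothetical.

```python
def publishing_cache_hit_ratio(total_hits: int, total_misses: int) -> float:
    """Percent of all requests since the last reboot that were cache hits."""
    total = total_hits + total_misses
    return 100.0 * total_hits / total if total else 0.0


# Totals accumulated over a month of uptime at a 99% hit ratio.
hits, misses = 49_500_000, 500_000
before = publishing_cache_hit_ratio(hits, misses)

# One day after the change: 1.7 million requests at a 10% hit ratio.
day_requests = 1_700_000
day_hits = day_requests // 10          # 170,000 served from the cache
day_misses = day_requests - day_hits   # 1,530,000 cache misses
after = publishing_cache_hit_ratio(hits + day_hits, misses + day_misses)

print(f"before={before:.1f}% after={after:.1f}% drop={before - after:.1f} points")
# before=99.0% after=96.1% drop=2.9 points
```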

Seeing this should serve as a healthy warning for anyone considering the use of the Publishing cache hit ratio counter alone for tuning purposes. In publishing environments where server uptime is maximized, the Publishing cache hit ratio may not provide any meaningful feedback unless the sampling time for changes is extended to days or even weeks. Such long tuning timelines aren’t overly practical for many heavily trafficked sites.
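One way around the cumulative view, assuming you can capture cumulative hit and miss totals at two points in time, is to compute the ratio over the difference between the two readings. The article only describes the ratio counter itself, so the snapshot type and function below are hypothetical; this is a sketch of the arithmetic, not a documented SharePoint feature.

```python
from dataclasses import dataclass


@dataclass
class CacheSnapshot:
    """Hypothetical reading of cumulative hit and miss totals."""
    total_hits: int
    total_misses: int


def interval_hit_ratio(earlier: CacheSnapshot, later: CacheSnapshot) -> float:
    """Hit ratio (percent) for only the requests between two readings."""
    hits = later.total_hits - earlier.total_hits
    misses = later.total_misses - earlier.total_misses
    if hits < 0 or misses < 0:
        raise ValueError("totals decreased; the counters were probably reset")
    total = hits + misses
    return 100.0 * hits / total if total else 0.0


# A month at 99%, then one day of 1.7 million requests at a 10% hit ratio.
start = CacheSnapshot(total_hits=49_500_000, total_misses=500_000)
end = CacheSnapshot(total_hits=start.total_hits + 170_000,
                    total_misses=start.total_misses + 1_530_000)
print(f"{interval_hit_ratio(start, end):.1f}%")  # 10.0%
```

Measured over the interval alone, the drop is obvious immediately. A decrease in either total between readings suggests a reset (such as a reboot), so the sketch refuses to compute a ratio in that case.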

So, What Happens When the Memory Allocation isn’t Enough?

In plainly non-technical terms: it gets ugly. Actual results will vary based on how memory starved the Object Cache is, as well as how hard the web front-ends (WFEs) in the farm are working on average. As you might expect, systems under greater stress or load tend to manifest symptoms more visibly than systems encountering lighter loads.

In my case, one of the client’s main sites was experiencing frequent Object Cache thrashing, and that led to spells of extremely erratic performance during times when flushes and cache compactions were taking place. The operations I describe are extremely resource intensive and can introduce blocking behavior in the request pipeline. Given the volume of requests that come through the client’s sites, the entire farm would sometimes drop to its knees as the Object Cache struggled to fill, flush, and serve as needed. Until the problem was located and the allocation was adjusted, a lot of folks remained on-call.

Tuning Recommendations

First and foremost: don’t adjust the size of the Object Cache memory allocation downwards unless you’ve got a really good reason for doing so, such as extreme memory constraints or some good internal knowledge indicating that the Object Cache simply isn’t being used in any substantial way for the site collection in question. As I’ve witnessed firsthand, the performance cost of under-allocating memory to the Object Cache can be far worse than the potential memory savings gained by tweaking.

Second, don’t make the same mistake I made and think that the Object Cache memory allocation is a static chunk of memory that’s claimed by MOSS for the site collection. The Object Cache uses only the memory it needs, and it will only start ejecting/flushing/compacting the Object Cache after the cache has become filled to the specified allocation limit.

And now, for the $64,000-contrary-to-common-sense tip …

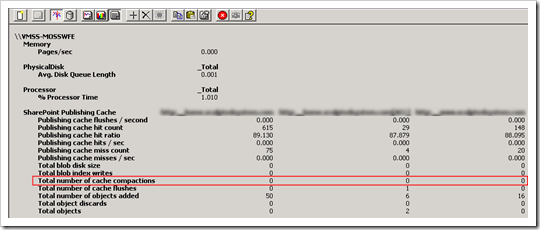

For tuning established site collections and the detection of thrashing behavior, Microsoft actually recommends using the Object Cache compactions performance counter (SharePoint Publishing Cache/Total number of cache compactions) to guide Object Cache memory allocation. Since cache compactions represent the greatest threat to ongoing optimal performance, Microsoft concluded (while working to help us) that monitoring the Total number of cache compactions counter was the best indicator of whether or not the Object Cache was memory starved and in trouble:

Steve Sheppard (a very knowledgeable Microsoft Escalation Engineer with whom I worked and highly recommend) wrote an excellent blog post that details the specific process he and the folks at Microsoft assembled to use the Total number of cache compactions counter in tuning the Object Cache’s memory allocation. I recommend reading his post, as it covers a number of details I don’t include here. The distilled guidelines he presents for using the Total number of cache compactions counter basically break counter values into three ranges:

- 0 or 1 compactions per hour: optimal

- 2 to 6 compactions per hour: adequate

- 7+ compactions per hour: memory allocation insufficient

In short: more than six cache compactions per hour is a solid sign that you need to adjust the site collection’s Object Cache memory allocation upwards. At this level of memory starvation within the Object Cache, there are bound to be secondary signs of performance problems popping up (for example, erratic response times and increasing ASP.NET request queue depth).
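Because the counter is named as a running total, a per-hour rate has to come from two readings taken some time apart. The sketch below assumes that, and it also assumes how to treat fractional rates that fall between the integer ranges above (anything above 1 and up to 6 counts as adequate). The function names and sample readings are hypothetical.

```python
def compactions_per_hour(first_total: int, second_total: int, minutes_apart: float) -> float:
    """Rate from two readings of a cumulative compaction counter."""
    if minutes_apart <= 0:
        raise ValueError("readings must be taken at different times")
    return (second_total - first_total) * 60.0 / minutes_apart


def classify_compaction_rate(per_hour: float) -> str:
    """Map a rate onto the three ranges listed above."""
    if per_hour <= 1:
        return "optimal"
    if per_hour <= 6:
        return "adequate"
    return "memory allocation insufficient"


# Two hypothetical readings taken 30 minutes apart: 8 compactions in that window.
rate = compactions_per_hour(120, 128, minutes_apart=30)
print(rate, classify_compaction_rate(rate))  # 16.0 memory allocation insufficient
```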

Conclusion

We were able to restore Object Cache performance to acceptable levels (and adjust our allocation down a bit), but we lacked good guidance and a quantifiable measure until the Total number of cache compactions performance counter came to light. Keep this in your back pocket for the next time you find yourself doing some tuning!

Addendum

I owe Steve Sheppard an additional debt of gratitude for keeping me honest and cross-checking some of my earlier statements and numbers regarding the Publishing cache hit ratio. Though the counter values persist beyond an IISReset, I had incorrectly stated that they persist beyond a reboot and effectively never reset. The values do reset, but only after a server reboot. I’ve updated this post to reflect the feedback Steve supplied. Thank you, Steve!

Additional Reading and References

- User Group: SharePoint Users of Indiana

- Blog: Andrew Connell

- TechNet: Caching In Office SharePoint Server 2007

- Blog: Steve Sheppard

Great material; you don’t see too many posts with this level of detail for SharePoint. For each site collection, I’m seeing four counters, following a pattern along these lines:

– the address itself

– the address itself + ‘[ACL]’ appended

– the address lower-cased + ‘[ACL]#1’ appended

– the address lower-cased + ‘#1’ appended

All of them are registering slightly different values. Which one is authoritative?

Good question, Nariman. I don’t have a definitive answer for you, and I’ll be up front and say that it’s been quite a while since I’ve played with these counters. Having said that, I’ll hazard some guesses.

I’ll start by admitting that I’ve never seen the ‘[ACL]#1’ and ‘#1’ instances you mentioned. My understanding is that the difference between the address-only counter set and the address + [ACL] set involves security and whether or not the counter values factor it in. If you have another “set” of counter instances (in your case, the ones including a ‘#1’), perhaps your Web app is extended to additional zones (resulting in additional IIS sites) or makes use of different authentication providers and/or FBA? That question/suggestion is purely extrapolation and guesswork on my part.

When I was doing my earlier troubleshooting work (when the article was written), it became clear to me after some monitoring which set of counters I should be watching. One counter set reflected very little traffic and/or activity, and the other showed a tremendous amount.

I would guess that where you should look will be a function of how traffic comes into your site collection, or more specifically, through which Web application in your environment the requests are arriving (through one zone or through many). I can’t tell you which specific zone you should watch, but if you see cache compactions anywhere, you should be watching closely. In my experience, cache compactions tend not to occur frequently, if they occur at all.

It’s also worth mentioning that things appear to have changed a bit with SharePoint 2010. In my SP2010 farm, each of the Web app-specific counter columns contains no data, just dashes. All of the publishing counters roll up under the “_Total” column. Admittedly, my (home) SP2010 environment doesn’t see a lot of traffic. Perhaps my environment is underutilizing some capabilities or isn’t configured optimally, but I hope that what I’m seeing is a sign that counter values are easier to read and interpret with SP2010.

It’s not much, but I hope it helps!

Sean, the article is excellent and informative. Seldom do we find such detailed and proficient content. Along the same lines, on one of our client’s SharePoint farms, caching surprisingly hasn’t been enabled, and I am going through the process of understanding the pros and cons of different caching techniques. Your article certainly adds to my resources.

I also looked at the BLOB cache technique. The BLOB cache and object cache look like a solution to overcome the problem of dead-slow applications here. A couple of questions below:

First, I would like to know if there are any constraints or points to be noted (which might send me back to the drawing board) if both the BLOB cache and the object cache are implemented.

The object cache refers to queries (most of the time). So what if the list data is changed? Does the cache get flushed and filled (irrespective of the cache expiry time)?

A point noted from the article above: cache flush, fill, and serve is a costly operation. So if a list that gets frequently updated is cache-enabled, that sounds like it would have an adverse effect. Can you shed some light on this?

Thanks

sreeharsha,

Thanks for the feedback; I’m glad that you found the article useful!

You asked about the object cache getting flushed if list data changed. The answer to that question depends on your selection for the “Cross List Query Cache Changes” option on the Object cache settings page (from within Site Settings for the site collection). You have two options available to you: “Check the server for changes every time a cross list query runs” and “Use the cached result of a cross list query for this many seconds.”

If the former option is selected, then a change check is made each time a query is run to determine if data is stale; if it is, then the cache is flushed and refilled. If no changes have been made, then cached data is used and better performance is obtained.

If the latter option is selected, cached data is used regardless of whether or not list item data changes have taken place. This results in the best overall performance, but it does come at the cost of some potentially stale data.
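The trade-off between the two options can be compared with a toy Python model. It is a sketch of the behavior described above, not SharePoint’s implementation: the class names, the change-token callback, and the fake clock are hypothetical, and in this model refills happen only when a query is executed.

```python
import time
from typing import Callable, Dict, List, Tuple


class ToyCrossListQueryCache:
    """Hypothetical model of the two 'Cross List Query Cache Changes' options."""

    def __init__(self, run_query: Callable[[str], List[str]],
                 change_token: Callable[[], int], check_every_time: bool,
                 ttl_seconds: float = 60.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._run_query = run_query
        self._change_token = change_token
        self.check_every_time = check_every_time
        self.ttl_seconds = ttl_seconds
        self._clock = clock
        # query -> (rows, change token at fill time, fill time)
        self._entries: Dict[str, Tuple[List[str], int, float]] = {}
        self.backend_queries = 0

    def execute(self, query: str) -> List[str]:
        entry = self._entries.get(query)
        if entry is not None:
            rows, token, filled_at = entry
            if self.check_every_time:
                # Option 1: a change check on every run (itself a round trip).
                if self._change_token() == token:
                    return rows
            elif self._clock() - filled_at < self.ttl_seconds:
                # Option 2: trust cached rows until they age out, even if stale.
                return rows
        # Nothing cached, data changed, or entry expired: query and refill.
        token = self._change_token()  # read before querying so no change is missed
        rows = self._run_query(query)
        self.backend_queries += 1
        self._entries[query] = (rows, token, self._clock())
        return rows


class FakeList:
    """Stand-in for list data whose change token bumps on every edit."""

    def __init__(self) -> None:
        self.items = ["Item A"]
        self.token = 1

    def add(self, item: str) -> None:
        self.items.append(item)
        self.token += 1


class FakeClock:
    def __init__(self) -> None:
        self.now = 0.0

    def __call__(self) -> float:
        return self.now


data, clock = FakeList(), FakeClock()
cached = ToyCrossListQueryCache(lambda q: list(data.items), lambda: data.token,
                                check_every_time=False, ttl_seconds=60, clock=clock)
cached.execute("rollup")
data.add("Item B")
print(cached.execute("rollup"))  # ['Item A'] (stale, still within 60 seconds)
clock.now = 61.0
print(cached.execute("rollup"))  # ['Item A', 'Item B']
```

With `check_every_time=True`, the same edit would be picked up on the very next run, at the cost of a change check on every execution.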

Since the data that the object cache holds in memory isn’t supposed to change that often (certain web properties like Title, navigational data if you’re using something like the PortalSiteMapProvider, cross-list/cross-site query information), caching without change checks is usually deemed acceptable. The performance improvements gained outweigh the potential negatives of stale data and having to check for changes on every request. Microsoft drew this conclusion in the move from SharePoint 2007 to SharePoint 2010, as well; in 2007, the server was checked for changes every time a cross-list query was run by default. In 2010, the default is to cache cross-list results for a fixed period of time without change checks.

To your final point: yes, cache flush and fill is an expensive process. If you have absolutely no tolerance for stale data, then you really don’t have any option and need to check the server for changes with each query. In the majority of the environments I’ve seen, though, data turns over frequently but can survive some small amount of “staleness.” A cache duration of 60 seconds isn’t that much to tolerate from an end-user perspective, but that amount of time can save a tremendous amount of back-end processing in environments where large numbers of queries are run to display data (e.g., a site with many Content Query Web Parts that are rolling up data).

Remember: even if a user goes to edit data, they’ll be going directly to the lists and libraries housing it — and that data will be up-to-date regardless of the state of the object cache.

I hope that helps!

Sean,

Thanks for your time and the detailed information. One follow-up: when the option “Use the cached result of a cross list query for this many seconds” is selected, what happens in the background during this time?

1. Are the updated results pulled into the cache (as with the BLOB cache), or

2. Does the query hit the actual data and refill the cache?

Can you please shed some light on this? If you have an article explaining this, it would be of great help.

Thanks.

sreeharsha,

I believe (but I haven’t confirmed) that the object cache flushes and fills based on user requests. If a user makes a request for data, that data gets retrieved and then stored by the cache. If data expires, the expiration is acted upon with the next user request that acts on that data. There isn’t an “active process” managing the cache.

The BLOB cache is actually somewhat different. I’ve dug into the internals of the BLOB cache in a previous post (http://sharepointinterface.com/2009/06/18/we-drift-deeper-into-the-sound-as-the-flush-comes/), and I can tell you with certainty that the initial population of BLOBs into the cache is driven by user request. After that, though, there is an active cache maintenance thread (in the application pool’s worker process) that wakes up at regular intervals, compares change token information for the cache with that in the associated site collection, and then proactively re-fetches updated content items.
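The two phases described here (request-driven population, then a maintenance thread that compares change tokens and re-fetches updated items) can be sketched as a toy model. The change log, token scheme, and class names are hypothetical; this illustrates the described pattern, not the BLOB cache’s actual code.

```python
import threading
from typing import Dict, List, Tuple


class ToySite:
    """Hypothetical site collection: files plus a change log keyed by token."""

    def __init__(self) -> None:
        self.files: Dict[str, bytes] = {"/logo.png": b"v1", "/site.css": b"v1"}
        self.token = 0
        self._log: List[Tuple[int, str]] = []

    def update(self, url: str, content: bytes) -> None:
        self.files[url] = content
        self.token += 1
        self._log.append((self.token, url))

    def changed_since(self, token: int) -> List[str]:
        return [url for t, url in self._log if t > token]


class ToyBlobCache:
    """Populated on request, then refreshed by a background maintenance thread."""

    def __init__(self, site: ToySite, interval_seconds: float = 60.0) -> None:
        self.site = site
        self.interval_seconds = interval_seconds
        self.files: Dict[str, bytes] = {}
        self.seen_token = site.token
        self._lock = threading.Lock()
        self._stop = threading.Event()

    def get(self, url: str) -> bytes:
        with self._lock:
            if url not in self.files:  # initial population is request-driven
                self.files[url] = self.site.files[url]
            return self.files[url]

    def maintenance_pass(self) -> None:
        """Compare change tokens and re-fetch cached items that changed."""
        with self._lock:
            latest = self.site.token
            if latest == self.seen_token:
                return
            for url in set(self.site.changed_since(self.seen_token)):
                if url in self.files:  # only refresh what is already cached
                    self.files[url] = self.site.files[url]
            self.seen_token = latest

    def start(self) -> threading.Thread:
        def loop() -> None:
            # Event.wait returns False on timeout, so this wakes every interval
            # until stop() sets the event.
            while not self._stop.wait(self.interval_seconds):
                self.maintenance_pass()
        worker = threading.Thread(target=loop, daemon=True)
        worker.start()
        return worker

    def stop(self) -> None:
        self._stop.set()


site = ToySite()
cache = ToyBlobCache(site)
cache.get("/logo.png")            # a user request populates the cache
site.update("/logo.png", b"v2")   # content changes in the site collection
site.update("/site.css", b"v2")   # never requested, so never cached
cache.maintenance_pass()          # one wake-up of the maintenance thread
print(cache.files)                # {'/logo.png': b'v2'}
```

The updated logo is refreshed without any user request, while the never-requested stylesheet stays out of the cache.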

This active maintenance of the BLOB cache by a separate thread is one of the reasons why you shouldn’t just delete objects out of the BLOB cache, as well. If the BLOB cache needs to be cleared across farm servers, SharePoint 2010 makes it pretty straightforward with some PowerShell (Steve Sheppard has a nice script here: http://blogs.msdn.com/b/steveshe/archive/2010/08/02/how-do-i-reset-the-disk-based-blob-cache-in-sharepoint-2010.aspx). In SharePoint 2007, flushing BLOB caches across the farm requires either an add-in (like my CodePlex add-in: http://blobcachefarmflush.codeplex.com) or manual action (http://sharepointinterface.com/2009/10/30/manually-clearing-the-moss-2007-blob-cache/). Flushing through the object model is preferable; manual clearing should really only be a last resort.

I hope that helps!

– Sean

Thanks a lot for the info, Sean. This certainly helps!